Metadata enrichment through natural language processing

Chris Olver, Metadata Creator, Georgian Papers Programme, King’s College London

My role, based in King’s College London Archives, has been to explore and implement ways of improving accessibility to the Georgian digital records of the Royal Archives. This work has broadly broken down into three distinct work paths: surveying and researching the historical database digital landscape; indexing and metadata enrichment; and investigating automatic handwritten transcription. In this essay I will focus on my ongoing work on indexing the GPP digital records, and look at some of the ways in which digital humanities provides useful tools for this work.

The initial stage of this work was to survey the digital environment of historical databases presenting 18th century records, to see how such records could be searched and accessed. This survey looked at over 42 different sites developed by archivists, librarians and academics to identify common approaches to displaying and navigating digital historical content. The main finding was that multiple pathways were available for searching the websites’ contents. These included: keyword search, which often broadened out to advanced search parameters (proximity/Boolean); some form of refining of search results, through the insertion of additional terms or the use of facet options [the ability to select material through index terms]; and the ability to browse records, typically through index terms [names, places, subjects]. These findings were reinforced by interviews with members of the GPP Academic Steering Group, who expressed how highly they prized a flexible and user-friendly search interface.

The survey results cemented the belief that one of the main ways to enhance the core cataloguing metadata being created by the Royal Archives was to enrich these records with additional index terms. The key question was what form these index terms would take: various appropriate standards for personal names, organisations, places and subject headings exist within the archives, libraries and museums sector. With the ambition that the final site be available to a global audience, there is a need to address some of the regional variations that arise between these standards. The issue was further complicated by the desire of the Royal Collection Trust to harmonise the standards of its archives, library and museum collections to facilitate future integration. Given these difficulties, it was decided that the best solution would be to create “working” indexes containing raw terms that could later be refined into the correct format once all the partners had fully agreed on the standards to use.

Initially, I concentrated on collecting personal names, as these were readily available and the variation between naming authorities was largely syntactic and could be amended at a later date. Potentially useful names were relatively easy to find, with a number of online sites providing standardised names for 18th century notables that could be collected into a master list, notably online catalogues and historical databases such as Parliamentary Papers Online, Electronic Enlightenment and the catalogues of the British Library and National Archives. As the majority of the records remained uncatalogued, this seemed the best method of gathering potentially useful names that could later be matched with Royal Archives catalogue records, potentially by using an automated tool.
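Because the variation between naming authorities is largely syntactic, much of it can be smoothed out mechanically. The following is a minimal Python sketch of that idea, not the method used in the project: the function names `name_key` and `group_variants` and the sample names are illustrative assumptions. It reduces each name to a comparison key that ignores word order, punctuation, case and appended life dates, and then groups the variants that share a key.

```python
import re


def name_key(name: str) -> str:
    """Reduce a personal name to a key that ignores order, case and punctuation."""
    # Drop life dates such as '1759-1806' that some authorities append.
    name = re.sub(r"\d{4}\s*-\s*(\d{4})?", " ", name)
    # Keep runs of letters only, lower-cased, and sort the parts so that
    # inverted ('Surname, Forename') and direct forms give the same key.
    parts = re.findall(r"[^\W\d_]+", name.lower())
    return " ".join(sorted(parts))


def group_variants(names):
    """Group raw name strings that share the same comparison key."""
    groups = {}
    for raw in names:
        groups.setdefault(name_key(raw), []).append(raw)
    return groups


# Illustrative master list (assumed sample data, not project data).
master_list = [
    "Pitt, William, 1759-1806",
    "William Pitt",
    "Fox, Charles James",
    "Charles James Fox",
    "North, Frederick",
]
groups = group_variants(master_list)
for key, variants in groups.items():
    print(key, "<-", variants)
```

A key of this kind deliberately throws information away, so it will also merge different people who share a name (the two William Pitts, for example). Its output is best treated as a list of candidate matches for manual review rather than as a finished authority file.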

Indexing place names can be a relatively simple process, as most standards only require the name and the latitude and longitude co-ordinates. Difficulties may occur depending on the granularity of the indexing: towns and cities are relatively well referenced, but streets or historic houses may be difficult as they may no longer exist or may have changed location. However, sites do exist that contain place names and co-ordinates for historical places in the United Kingdom. Place names can be harvested from existing online indexes and printed catalogue references, and can then either be manually matched with existing records in the Getty Thesaurus of Geographic Names or automatically matched using programmes such as OpenRefine.
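The automatic matching step can be illustrated with a small Python sketch. It is not how OpenRefine works internally: it simply uses the standard library's `difflib` to find the closest entry in a gazetteer and return its co-ordinates. The `GAZETTEER` table, its approximate co-ordinates, the `match_place` function and the `cutoff` value are all assumptions made for illustration.

```python
from difflib import get_close_matches

# Hypothetical gazetteer: place name -> (latitude, longitude), approximate values.
GAZETTEER = {
    "Windsor": (51.48, -0.61),
    "Kew": (51.48, -0.29),
    "Weymouth": (50.61, -2.46),
    "Cheltenham": (51.90, -2.07),
}


def match_place(raw, gazetteer=GAZETTEER, cutoff=0.8):
    """Return (gazetteer name, co-ordinates) for the closest entry, or None."""
    # Compare in lower case, but remember the gazetteer's own spelling.
    lookup = {name.lower(): name for name in gazetteer}
    hits = get_close_matches(raw.strip().lower(), list(lookup), n=1, cutoff=cutoff)
    if not hits:
        return None
    name = lookup[hits[0]]
    return name, gazetteer[name]


# A harvested spelling variant, and a place missing from the gazetteer.
print(match_place("Cheltenam"))
print(match_place("Edinburgh"))
```

Raising `cutoff` reduces false matches at the cost of missing more spelling variants. Places that return `None` would still need to be matched by hand.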

The creation of subject indexing is the most challenging aspect of indexing the Georgian Papers. Unlike personal names and place names, it requires a considerable amount of selection at various stages of the process. For example, should you use an existing classification system such as the United Kingdom Archival Thesaurus (UKAT) or Library of Congress Subject Headings (LCSH), or develop your own index system based on the natural language found within the actual documents? Either method carries the risk of obscuring records, whether through too broad a classification or too specific a terminology. Further complications arise because cataloguing and digitisation of the records will be ongoing for the project’s length, so any solution will have to be a compromise as to which subject indexing will be of greatest use to users in the meantime.

For the reasons above, I have been exploring the possibility of using natural language processing of the available primary source literature as the basis of subject indexing. While the Georgian Papers Programme will mainly be digitising unpublished material, the published correspondence and other Royal Papers can potentially provide a broad indication of useful terms for the whole digital collection. The published correspondence of George III, edited by John Fortescue, was digitised by the Royal Archives and transcripts were obtained using the OCR model available on the Transkribus platform. The six volumes are not exhaustive; however, given the sheer scale of the publication, with 3325 pages and over a million words, they prove to be a useful resource for finding valid keywords in the Archives. At a later date, it will be possible to analyse transcripts from other published primary sources such as the correspondence of George IV edited by A. Aspinall, the diaries of Queen Charlotte and other Royal Household accounts. In addition, transcripts will be available from the Georgian Papers themselves, through corrected documents produced by handwritten text recognition.

The analysis of these transcripts depends on using natural language processing tools to identify useful terms and names for indexing. I have experimented with a number of tools but will focus on only a couple before exploring the next stages in the development of subject indexing.

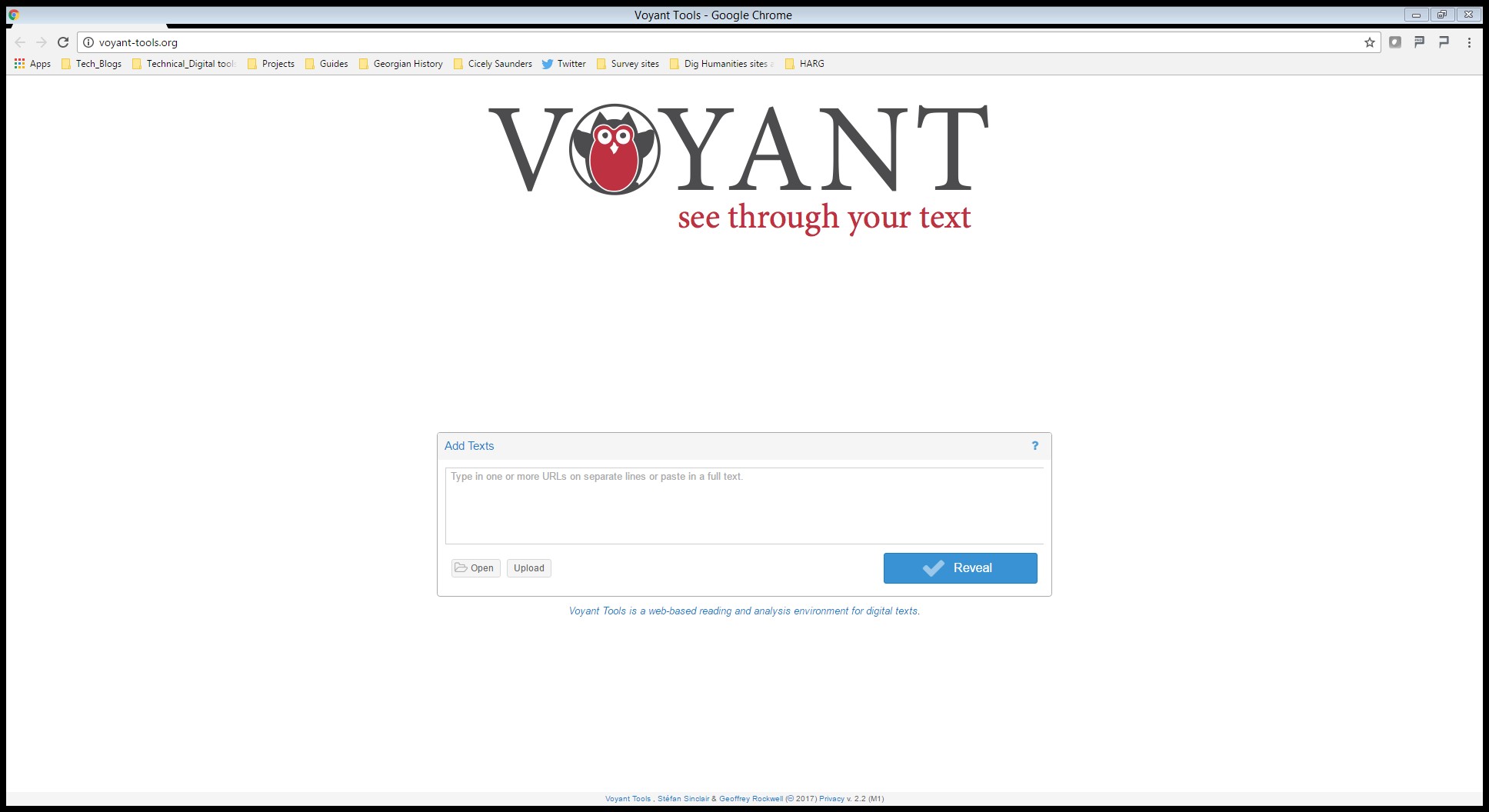

Voyant Tools is an open source programme that provides a simple and effective way of displaying the frequency of words used within documents. Figure 1 shows an example of terms extracted from part of Volume 1 of Fortescue. As you can see, the most frequently used words are mostly definite articles, prepositions, verbs and adjectives, which are not of particular interest in isolation. A look at the nouns begins to show the potential use of the tool in finding terms for subject indexing.

Figure 1 Voyant Tools: Web based text analyser which allows lexical analysis including the study of frequency and distribution data.

Whilst the results do require a certain amount of cleaning, they begin to show how this form of analysis can be refined to find useful terms for indexing. Another potential approach, which might provide better results without the need for extensive post-processing, would be to use a topic modelling tool such as MALLET, which can recognise topics within texts rather than just count word frequencies.
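To make the counting and cleaning steps concrete, here is a minimal Python sketch of a word frequency count that filters out common function words before ranking. It is not how Voyant Tools is implemented. The `STOP_WORDS` list is a deliberately tiny assumption, and the sample sentence is invented for illustration rather than quoted from Fortescue.

```python
import re
from collections import Counter

# A deliberately small stop-word list; a real one would be much longer.
STOP_WORDS = {
    "the", "of", "and", "to", "a", "in", "that", "is", "be", "his", "for",
    "it", "with", "as", "have", "has", "by", "not", "which", "on", "will",
    "was", "before",
}


def term_frequencies(text, stop_words=STOP_WORDS, min_length=3):
    """Count words, ignoring case, stop words and very short tokens."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(
        w for w in words if w not in stop_words and len(w) >= min_length
    )


# Invented sample text, standing in for an OCR transcript.
sample = (
    "The King has received the letter of Lord North. "
    "The Parliament will meet, and the King trusts that Lord North "
    "will lay the treaty before the Parliament."
)
frequencies = term_frequencies(sample)
print(frequencies.most_common(5))
```

Even on this toy input, removing the stop words leaves mostly nouns and names at the top of the list, which is the kind of material a subject index would draw on.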

GATE (General Architecture for Text Engineering)

General Architecture for Text Engineering, or GATE, is a Java suite of tools originally developed at the University of Sheffield. It is open source software which supports a variety of natural language processing tasks, including information extraction and semantic annotation. By using ANNIE (A Nearly-New Information Extraction System) to process a sample of the OCR transcript from Fortescue, it was possible to obtain names, locations and organisations within the text. The results provided 552 names for the volume tested.

Figure 2 Homepage for GATE (General Architecture for Text Engineering): GATE provides multiple natural language processing tools.
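ANNIE combines several components, including gazetteer lists and hand-written rules, to recognise entities. The Python sketch below does not use GATE or its API. It is only a toy illustration of the gazetteer-lookup idea, in which known names are matched in the text and tagged with a type. The `GAZETTEER` entries, the `annotate` function and the sample sentence are all assumptions made for illustration.

```python
import re

# Hypothetical gazetteer lists, keyed by entity type.
GAZETTEER = {
    "Person": ["Lord North", "Duke of Grafton"],
    "Location": ["Windsor", "Kew"],
    "Organisation": ["House of Commons", "East India Company"],
}


def annotate(text, gazetteer=GAZETTEER):
    """Return sorted (start, end, type, matched text) tuples for gazetteer hits."""
    annotations = []
    for entity_type, entries in gazetteer.items():
        for entry in entries:
            # Match the entry as a whole phrase, not inside a longer word.
            pattern = r"\b" + re.escape(entry) + r"\b"
            for m in re.finditer(pattern, text):
                annotations.append((m.start(), m.end(), entity_type, m.group()))
    return sorted(annotations)


# Invented sample sentence, standing in for an OCR transcript.
sample = "Lord North attended the House of Commons before travelling to Windsor."
for start, end, entity_type, matched in annotate(sample):
    print(entity_type, matched, start, end)
```

A lookup of this kind only finds names that are already in the lists, which is why a master list of names and places is a useful input to the process, and why rule-based systems such as ANNIE add further components on top of it.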

Although this work is at a very early stage, the initial results of using digital tools to analyse digital records show great potential for creating useful metadata content through natural language processing. The benefit of natural language processing is that it quickly identifies useful names, locations and organisations within the records. It also provides the linguistic tools needed to identify keywords within a selected corpus of documents, which will provide the bedrock of subject indexing. While these techniques may not be applicable to some archival cataloguing projects, with over 350,000 digital images being generated in the next three years an automated solution feels like a pragmatic approach to indexing the Royal Archives’ Georgian papers collection.